2D SVM and line equations

SVM starts as a 2D idea: find the line that separates two classes of points with the widest possible margin. The math behind it looks like this.

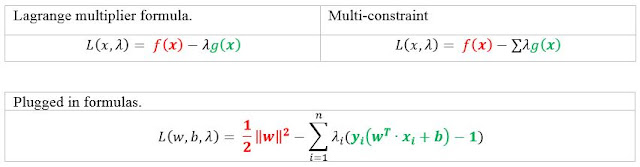
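The figure is not described in the text, so the following is only a minimal sketch, assuming it shows the usual separating line `w · x + b = 0` with margin lines at `w · x + b = ±1`. The weights `w`, the offset `b`, and the sample points are hypothetical values chosen for illustration.

```python
import math

def decision_value(w, b, point):
    """Signed score w . x + b; it is zero exactly on the separating line."""
    return w[0] * point[0] + w[1] * point[1] + b

def classify(w, b, point):
    """Return +1 on one side of the line and -1 on the other."""
    return 1 if decision_value(w, b, point) >= 0 else -1

def margin_width(w):
    """Distance between the two margin lines w . x + b = +1 and -1."""
    return 2.0 / math.hypot(w[0], w[1])

# Hypothetical line x1 + x2 - 3 = 0 (an assumption, not taken from the figure).
w, b = (1.0, 1.0), -3.0

print(classify(w, b, (3.0, 2.0)))   # 1
print(classify(w, b, (0.5, 1.0)))   # -1
print(margin_width(w))              # about 1.414
```

Training an SVM amounts to choosing `w` and `b` so that `margin_width(w)` is as large as possible while every training point is still classified correctly.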

(n)D SVM and Lagrange multiplier

Higher-dimensional data and linearly inseparable problems are then taken into consideration. The problem now involves multidimensional variables with several constraints, which leads to Lagrange's method for constrained optimization: the line equation is plugged into the Lagrange formula.
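As a sketch of what that looks like, assume the hard-margin case with training pairs $(x_i, y_i)$, labels $y_i \in \{-1, +1\}$, and one multiplier $\alpha_i \ge 0$ per constraint. These symbols are assumptions, since the original formula is not reproduced in the text. The constrained problem and its Lagrangian are then commonly written as:

$$
\begin{aligned}
&\min_{w,\,b}\ \tfrac{1}{2}\lVert w \rVert^2
\quad \text{subject to} \quad y_i\,(w \cdot x_i + b) \ge 1, \quad i = 1, \dots, n \\
&L(w, b, \alpha) = \tfrac{1}{2}\lVert w \rVert^2 - \sum_{i=1}^{n} \alpha_i \left[\, y_i\,(w \cdot x_i + b) - 1 \,\right]
\end{aligned}
$$

Here $w \cdot x + b = 0$ is the line (hyperplane) equation from the 2D case. The linearly inseparable case usually adds slack variables and a penalty constant, which are left out of this sketch.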

This formula is then gradually simplified to the final SVM formula with constraints, where K is a kernel: a function to which you pass two vectors and which measures their distance (or similarity) in a higher or different dimensional space.

SVM formula with constraints
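The formula itself is missing from the text. A standard form of it, assuming training pairs $(x_i, y_i)$ with labels $y_i \in \{-1, +1\}$ and multipliers $\alpha_i$ (notation that is an assumption here, not the author's), is:

$$
\begin{aligned}
&\max_{\alpha}\ \sum_{i=1}^{n} \alpha_i - \tfrac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i\, \alpha_j\, y_i\, y_j\, K(x_i, x_j) \\
&\text{subject to} \quad \alpha_i \ge 0, \qquad \sum_{i=1}^{n} \alpha_i\, y_i = 0
\end{aligned}
$$

In the soft-margin variant used for inseparable data, the first constraint is typically tightened to $0 \le \alpha_i \le C$ for a chosen penalty constant $C$.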

SVM Kernels

Some of the kernels are listed below (the author may update this later with the actual formulas). Looking at them, you can see that they are just different ways of measuring the distance between vectors, instances, or rows. More about this in the SVM interpretation section.

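- Linear, which is the plain dot product.

- Gaussian, which uses the squared Euclidean distance.

- RBF (radial basis function), of which the Gaussian is the most common form.

- Exponential, which looks like a simpler version of the Gaussian: the Euclidean distance is not squared.

- Polynomial, which uses the dot product raised to a power.

- Hybrid, which is a mix of polynomial and Gaussian.

- Sigmoidal, which applies the hyperbolic tangent (the S-shaped function also used in neural networks) to a scaled dot product.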
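To make the "different ways of measuring distance" point concrete, here is a minimal pure-Python sketch of five of these kernels in their commonly used forms. The parameter names (`sigma`, `degree`, `coef0`, `gamma`) and their defaults are assumptions for illustration; libraries parameterize these kernels in slightly different ways. The hybrid kernel is left out because its exact form is not given here.

```python
import math

def dot(x, z):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, z))

def squared_distance(x, z):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, z))

def linear_kernel(x, z):
    return dot(x, z)

def gaussian_kernel(x, z, sigma=1.0):
    # exp(-||x - z||^2 / (2 sigma^2)); the most common RBF kernel.
    return math.exp(-squared_distance(x, z) / (2.0 * sigma ** 2))

def exponential_kernel(x, z, sigma=1.0):
    # Same shape as the Gaussian, but the distance is not squared.
    return math.exp(-math.sqrt(squared_distance(x, z)) / (2.0 * sigma ** 2))

def polynomial_kernel(x, z, degree=2, coef0=1.0):
    # Dot product plus a constant, raised to a power.
    return (dot(x, z) + coef0) ** degree

def sigmoid_kernel(x, z, gamma=0.5, coef0=0.0):
    # tanh of a scaled dot product.
    return math.tanh(gamma * dot(x, z) + coef0)

x, z = [1.0, 2.0], [3.0, 4.0]
print(linear_kernel(x, z))       # 11.0
print(polynomial_kernel(x, z))   # 144.0
print(gaussian_kernel(x, x))     # 1.0
```

The Gaussian and exponential kernels return 1 for identical vectors and decay toward 0 as the vectors move apart, while the linear and polynomial kernels grow with the dot product.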

Possible transformations between Lagrange SVM and final formula

Some possible transformations between the Lagrange formula and the final SVM formula may look like this.
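The original steps are not reproduced in the text, so the following is one common route, sketched under assumptions: the hard-margin Lagrangian $L(w, b, \alpha) = \tfrac{1}{2}\lVert w \rVert^2 - \sum_i \alpha_i \left[ y_i (w \cdot x_i + b) - 1 \right]$ with labels $y_i \in \{-1, +1\}$ and multipliers $\alpha_i \ge 0$. Setting the derivatives with respect to $w$ and $b$ to zero and substituting back gives:

$$
\begin{aligned}
\frac{\partial L}{\partial w} = 0 \ &\Rightarrow\ w = \sum_{i=1}^{n} \alpha_i\, y_i\, x_i \\
\frac{\partial L}{\partial b} = 0 \ &\Rightarrow\ \sum_{i=1}^{n} \alpha_i\, y_i = 0 \\
L(\alpha) &= \sum_{i=1}^{n} \alpha_i - \tfrac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i\, \alpha_j\, y_i\, y_j\, (x_i \cdot x_j)
\end{aligned}
$$

The data now appear only through the dot products $x_i \cdot x_j$, so replacing each one with $K(x_i, x_j)$ yields the kernel form of the formula.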

