Learning

The learning step finds the closest and furthest vectors for each label pair.

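```java
// Run the closest/furthest search for every one-vs-other label pair,
// using the given similarity settings for each kind of search.
public void findAllClosestAndFurthest(List<OneVsOtherBox> labelPairs,
        SimilaritySettingsBox closest, SimilaritySettingsBox furthest) {
    for (int i = 0; i < labelPairs.size(); i++) {
        OneVsOtherBox labelPair = labelPairs.get(i);
        findClosestAndFurthest(labelPair, closest, furthest);
    }
}
```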
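The label pairs are “one vs other” groupings: one class label against every vector carrying a different label. The sketch below shows one way such groupings could be built from labeled data. It is hypothetical: `Labeled` and `OneVsOther` are illustrative stand-ins for `VectorBox` and `OneVsOtherBox`, not their real APIs.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: builds one-vs-other groupings from labeled vectors.
// Labeled and OneVsOther are illustrative stand-ins, not the original classes.
final class OneVsOtherDemo {

    // A labeled point; stands in for VectorBox.
    record Labeled(String label, double[] values) {}

    // One label against all vectors that carry any other label.
    record OneVsOther(String oneLabel, List<Labeled> ones, List<Labeled> others) {}

    static List<OneVsOther> buildPairs(List<Labeled> data) {
        // LinkedHashMap keeps labels in first-seen order, so the output order is deterministic.
        Map<String, List<Labeled>> byLabel = new LinkedHashMap<>();
        for (Labeled v : data) {
            byLabel.computeIfAbsent(v.label(), k -> new ArrayList<>()).add(v);
        }
        List<OneVsOther> pairs = new ArrayList<>();
        for (String label : byLabel.keySet()) {
            // Every vector whose label differs from the current one goes to "others".
            List<Labeled> others = new ArrayList<>();
            for (Labeled v : data) {
                if (!v.label().equals(label)) {
                    others.add(v);
                }
            }
            pairs.add(new OneVsOther(label, byLabel.get(label), others));
        }
        return pairs;
    }
}
```

With three distinct labels this yields three pairs, and each vector appears once as a “one” and once as an “other” for every other label.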

“findClosestAndFurthest” uses a single find routine with a Boolean flag that selects whether the closest or the furthest vectors are searched for.

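```java
// Excerpt: search each target; the Boolean 'closest' selects closest vs. furthest.
for (int i = 0; i < targets.size(); i++) {
    VectorBox target = targets.get(i);
    VectorBox closestVector = closestOrFurthest(findClosestOrFurthestThis, target, settings, closest);
    if (closestVector != null) {
        if (closest) {
            // Add the vector only if it is not already used
            if (closestVector.getClosest() == 0) {
                closestOrFurthestVectors.add(closestVector);
                // ... (remainder of the method omitted in this excerpt)
            }
        }
    }
}
```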
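The idea of one search routine switched by a Boolean can be sketched in isolation. This is a minimal sketch under assumptions: vectors are plain `double[]` arrays and the distance is Euclidean, whereas the original “closestOrFurthest” works on `VectorBox` objects with configurable similarity settings.

```java
import java.util.List;

// Hypothetical sketch: one search where a Boolean flag selects closest vs. furthest.
// Plain double[] vectors and Euclidean distance are assumptions for illustration.
final class ClosestOrFurthestDemo {

    static double euclidean(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    // Returns the candidate nearest to target when closest == true,
    // otherwise the candidate farthest from it; null if there are no candidates.
    // On ties the earlier candidate is kept.
    static double[] closestOrFurthest(List<double[]> candidates, double[] target, boolean closest) {
        double[] best = null;
        double bestDist = 0.0;
        for (double[] c : candidates) {
            double d = euclidean(c, target);
            boolean better = closest ? d < bestDist : d > bestDist;
            if (best == null || better) {
                best = c;
                bestDist = d;
            }
        }
        return best;
    }
}
```

For candidates (1, 0), (5, 0) and (3, 4) around the origin, the closest search returns (1, 0) and the furthest search returns (5, 0), because (3, 4) is at the same distance and ties keep the earlier vector.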

It

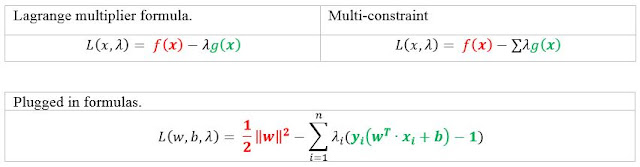

uses settings which contain the pointers to formulas which measure the

distances to try and solve inseparable problem.

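```java
// Distance and similarity measures that the settings can refer to.
// 'v' is a vector-math helper object defined elsewhere in the class.
public double euclidean(VectorBox a, VectorBox b) {
    return v.euclideanDistance(a, b);
}

public double linear(VectorBox a, VectorBox b) {
    return v.dotProduct(a, b);
}

// RBF expressed through the Gaussian with gamma = 1 / (2 * ro^2).
public double RBF(VectorBox a, VectorBox b, double ro) {
    double gamma = 1.0 / (2 * v.square(ro));
    return gaussian(a, b, gamma);
}

public double gaussian(VectorBox a, VectorBox b, double gamma) {
    // ... (body not included in this excerpt)
}
```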
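The measures above can be illustrated on plain arrays. This sketch assumes `double[]` vectors instead of `VectorBox`, and it assumes the missing “gaussian” body is the standard Gaussian kernel $\exp(-\gamma \lVert a - b \rVert^2)$; the original body is not shown, so this is an illustration rather than the author's code.

```java
// Sketch of the measures above on plain double[] vectors (an assumption).
// The Gaussian body is assumed to be the standard kernel exp(-gamma * ||a - b||^2).
final class KernelDemo {

    // Sum of squared component differences, ||a - b||^2.
    static double squaredDistance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    static double euclidean(double[] a, double[] b) {
        return Math.sqrt(squaredDistance(a, b));
    }

    // Linear kernel: the dot product of a and b.
    static double linear(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += a[i] * b[i];
        }
        return sum;
    }

    // Gaussian kernel: 1.0 for identical vectors, approaching 0 as they move apart.
    static double gaussian(double[] a, double[] b, double gamma) {
        return Math.exp(-gamma * squaredDistance(a, b));
    }

    // RBF with width ro, mapped to gamma = 1 / (2 * ro^2) as in RBF(...) above.
    static double rbf(double[] a, double[] b, double ro) {
        double gamma = 1.0 / (2.0 * ro * ro);
        return gaussian(a, b, gamma);
    }
}
```

One design point worth keeping in mind: the Euclidean value shrinks as vectors get closer, while the Gaussian value grows, so any comparison such as `distance <= radius` has to account for which direction the chosen measure runs.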

Predicting

Various modifications were made to prevent artificially high precision caused by how the data is sorted and how class labels are counted. Shuffling the data and counting labels with hash maps gave the most reliable results.
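A minimal sketch of the two techniques named above, shuffling the input and counting labels in a hash map, might look like the following. The vote-counting scheme and the helper names are hypothetical; the text only states that shuffling and hash maps gave the most reliable results.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical sketch: shuffle the input order and count label votes in a HashMap.
final class LabelVoteDemo {

    // Returns a shuffled copy so the caller's list keeps its original order.
    static <T> List<T> shuffledCopy(List<T> data, long seed) {
        List<T> copy = new ArrayList<>(data);
        Collections.shuffle(copy, new Random(seed));
        return copy;
    }

    // Counts how many times each label was voted for.
    static Map<String, Integer> countVotes(List<String> votes) {
        Map<String, Integer> counts = new HashMap<>();
        for (String v : votes) {
            counts.merge(v, 1, Integer::sum);
        }
        return counts;
    }

    // Returns the label with the most votes, or null when there are none.
    // Ties are broken alphabetically so the result does not depend on HashMap iteration order.
    static String majority(Map<String, Integer> counts) {
        String best = null;
        int bestCount = -1;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            int c = e.getValue();
            if (c > bestCount || (c == bestCount && e.getKey().compareTo(best) < 0)) {
                best = e.getKey();
                bestCount = c;
            }
        }
        return best;
    }
}
```

The explicit tie-break matters because a `HashMap` gives no iteration-order guarantee; without it, the winning label on a tie could change between runs or JVM versions.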

For each label pair, the classifier checks which class the unknown vector is likely to belong to.

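```java
// Entry point: store the settings, shuffle the input order, then predict.
// Note: Collections.shuffle reorders the caller's list in place.
public List<VectorBox> predict(List<VectorBox> predictData, SettingsBox settings) {
    currentSettings = settings;
    Collections.shuffle(predictData);
    return predict(predictData);
}

// Reset the labels of the data to unknowns, then assign a label to each vector.
public List<VectorBox> predict(List<VectorBox> data) {
    resetDataLabels(data);
    for (int i = 0; i < data.size(); i++) {
        assignLabels(data.get(i));
    }
    return data;
}
```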

The “assignLabels” method iterates through all label pairs; for each pair, “assignLabel” works through the furthest vectors and measures the radii as described in the design section.

```java
// Run the per-pair label assignment for every one-vs-other label pair.
public void assignLabels(VectorBox unknownLabel) {
    for (int i = 0; i < labelPairs.size(); i++) {
        OneVsOtherBox l = labelPairs.get(i);
        assignLabel(unknownLabel, l, currentSettings.getPredictSimilaritySettings(), l.getOneLabel());
    }
}
```

The “inSphere” method checks whether a new unknown vector lies inside the sphere that represents a class. It iterates through each furthest vector and finds the closest opposite-class vector to it. Starting from that opposite-class vector, it then finds the closest vector belonging to the class being tested. These two vectors give a midpoint, from which the radius is derived; points inside the radius are treated as belonging to the class. The second loop checks each closest vector against its own, smaller radius, in case the unknown vector is not within range of any furthest-vector radius.

```java
public boolean inSphere(VectorBox unknownLabel, List<VectorBox> closestOnes, List<VectorBox> furthestOnes,
        List<VectorBox> closestOthers, SimilaritySettingsBox similaritySettings) {
    // First pass: a sphere around each furthest vector of the class.
    for (int i = 0; i < furthestOnes.size(); i++) {
        // furthestOne--->closestOne---><---closestOther<---furthestOther
        VectorBox furthestOne = furthestOnes.get(i);
        // furthestOne-----------------><---closestOtherToFurthestOne------------
        VectorBox closestOtherToFurthest = findClosest(furthestOne, closestOthers, similaritySettings);
        // --------->closestOneToClosestOther---><---closestOtherToFurthestOne---
        VectorBox closestOneToClosestOther = findClosest(closestOtherToFurthest, closestOnes, similaritySettings);
        VectorBox midPoint = extraMath.middle(closestOtherToFurthest, closestOneToClosestOther);
        double midDist = similarity(midPoint, closestOtherToFurthest, similaritySettings);
        // Radius: distance to the opposite class minus the half-gap at the midpoint,
        // scaled by the prediction search sensitivity.
        double safeDist = similarity(furthestOne, closestOtherToFurthest, similaritySettings) - midDist;
        safeDist *= currentSettings.getPredictionSearchSensitivity();
        double distance = similarity(unknownLabel, furthestOne, similaritySettings);
        if (distance <= safeDist) {
            return true;
        }
    }
    // Second pass: a small sphere around each closest vector, reaching halfway
    // to its nearest opposite-class vector.
    for (int j = 0; j < closestOnes.size(); j++) {
        VectorBox closestOne = closestOnes.get(j);
        VectorBox closestOther = findClosest(closestOne, closestOthers, similaritySettings);
        double dist1 = similarity(unknownLabel, closestOne, similaritySettings);
        VectorBox midP = extraMath.middle(closestOne, closestOther);
        double midDist = similarity(midP, closestOne, similaritySettings);
        if (dist1 <= midDist) {
            return true;
        }
    }
    return false;
}
```
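The two passes of “inSphere” can be reproduced on a tiny example. This simplified sketch assumes plain `double[]` vectors, Euclidean distance for “similarity”, and the arithmetic midpoint for “middle”; the guard for empty lists is an addition for safety, not part of the original.

```java
import java.util.List;

// Simplified sketch of the inSphere test. Assumptions: double[] vectors,
// Euclidean distance for similarity(...), arithmetic midpoint for middle(...).
final class InSphereDemo {

    static double dist(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    static double[] middle(double[] a, double[] b) {
        double[] m = new double[a.length];
        for (int i = 0; i < a.length; i++) {
            m[i] = (a[i] + b[i]) / 2.0;
        }
        return m;
    }

    static double[] findClosest(double[] from, List<double[]> candidates) {
        double[] best = null;
        double bestDist = Double.POSITIVE_INFINITY;
        for (double[] c : candidates) {
            double d = dist(from, c);
            if (d < bestDist) {
                best = c;
                bestDist = d;
            }
        }
        return best;
    }

    static boolean inSphere(double[] unknown, List<double[]> closestOnes, List<double[]> furthestOnes,
                            List<double[]> closestOthers, double sensitivity) {
        // Added guard (not in the original): findClosest would return null on empty lists.
        if (closestOnes.isEmpty() || closestOthers.isEmpty()) {
            return false;
        }
        // First pass: sphere around each furthest vector, radius scaled by the sensitivity.
        for (double[] furthestOne : furthestOnes) {
            double[] closestOther = findClosest(furthestOne, closestOthers);
            double[] closestOne = findClosest(closestOther, closestOnes);
            double[] mid = middle(closestOther, closestOne);
            double safeDist = (dist(furthestOne, closestOther) - dist(mid, closestOther)) * sensitivity;
            if (dist(unknown, furthestOne) <= safeDist) {
                return true;
            }
        }
        // Second pass: sphere around each closest vector, reaching halfway to its nearest other.
        for (double[] closestOne : closestOnes) {
            double[] closestOther = findClosest(closestOne, closestOthers);
            double[] mid = middle(closestOne, closestOther);
            if (dist(unknown, closestOne) <= dist(mid, closestOne)) {
                return true;
            }
        }
        return false;
    }
}
```

For example, with furthest vector (0, 0), class vectors (2, 0) and (1, 0), and opposite-class vectors (4, 0) and (10, 0), the midpoint is (3, 0) and the radius is 4 - 1 = 3. The point (2.5, 0) is inside, (3.5, 0) is outside at sensitivity 1.0, and (3.5, 0) becomes inside at sensitivity 1.2 because the radius grows to 3.6.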

The slack-variable solution to the inseparable problem can be seen in the code snippet above as “safeDist *= currentSettings.getPredictionSearchSensitivity()”. It scales the sphere radius by the given value: values above 1 enlarge the sphere and values below 1 shrink it.
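Written as a formula, the first pass of “inSphere” computes the radius below. The symbols are introduced only for this summary: $f$ is a furthest vector of the class, $o$ the closest opposite-class vector to $f$, $c$ the closest own-class vector to $o$, $m$ their midpoint, $d$ the chosen distance measure, $s$ the prediction search sensitivity, and $x$ the unknown vector.

$$
m = \frac{o + c}{2}, \qquad r = s\,\bigl(d(f, o) - d(m, o)\bigr), \qquad x \text{ is inside if } d(x, f) \le r
$$

When $d$ is a metric such as the Euclidean distance, the triangle inequality gives $d(f, o) - d(m, o) \le d(f, m)$, so with $s = 1$ the radius never exceeds the distance from $f$ to the midpoint; $s > 1$ relaxes this bound, which is the slack the text refers to.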

Slow Machine

To improve learning, an option to search for the best learning subset was also implemented. It randomly creates subsets of the learning data, learns each subset, predicts, resets the original data to unknowns, then compares the prediction precision and keeps track of the best subset. Because calculating precision when using subsets is difficult and complex, these subsets were not used for the reported results, even though they scored better. One such difficulty arises when a subset contains vectors of only one class label, which yields 100% precision because there is no other class.

```java
public List<VectorBox> tryFindBestTrainSet(List<VectorBox> learn, List<VectorBox> predict, SettingsBox s,
        int times, int subsetSize) {
    List<List<VectorBox>> randomSubsets = getRandomSubsets(learn, times, subsetSize);
    double[] results = new double[times];
    double high = 0;
    // Learn each random subset, predict, and record the prediction precision.
    for (int i = 0; i < times; i++) {
        List<VectorBox> randomSubset = randomSubsets.get(i);
        FastMachine lss = new FastMachine(s);
        lss.learn(randomSubset);
        resetDataLabels(predict);
        List<VectorBox> predicted = lss.predict(predict);
        results[i] = predictonPrecision(predicted, learn);
        if (results[i] > high) {
            high = results[i];
            addDisplayInfo("Trying to find best learning subset: " + "Try: " + (i + 1) + " Prediction: " + high + "%");
        }
    }
    // Pick the subset with the highest precision (the first one wins on ties).
    double highest = results[0];
    List<VectorBox> bestTrainingSubset = randomSubsets.get(0);
    for (int i = 0; i < results.length; i++) {
        if (highest < results[i]) {
            highest = results[i];
            bestTrainingSubset = randomSubsets.get(i);
        }
    }
    return bestTrainingSubset;
}
```
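The helper “getRandomSubsets” is called above but not shown. A plausible sketch, assuming each subset is drawn without replacement by shuffling a copy of the data and taking its first `subsetSize` elements, is given below; the extra `Random` parameter is a hypothetical addition that makes runs reproducible.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical sketch of getRandomSubsets(learn, times, subsetSize).
// Each subset is sampled without replacement; subsets may overlap with each other.
final class RandomSubsetsDemo {

    static <T> List<List<T>> getRandomSubsets(List<T> data, int times, int subsetSize, Random random) {
        if (subsetSize < 0 || subsetSize > data.size()) {
            throw new IllegalArgumentException("subsetSize must be between 0 and data.size()");
        }
        List<List<T>> subsets = new ArrayList<>(times);
        for (int i = 0; i < times; i++) {
            List<T> copy = new ArrayList<>(data);
            Collections.shuffle(copy, random);
            // Copy the subList view so each subset is independent of the shuffled list.
            subsets.add(new ArrayList<>(copy.subList(0, subsetSize)));
        }
        return subsets;
    }
}
```

Shuffling a fresh copy per try keeps the caller's list untouched, unlike the in-place `Collections.shuffle(predictData)` in the prediction entry point.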

Finding the best settings and finding the best closest-similarity settings work the same way: randomly generated settings are run through “FastMachine” multiple times, and the settings with the highest prediction precision are kept.
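This is a random search. The sketch below shows the pattern for a single numeric setting, such as a search-sensitivity factor, scored by a caller-supplied function. Both the single-parameter simplification and the `Result` type are hypothetical; the original generates whole settings objects and scores them by running “FastMachine” and measuring prediction precision.

```java
import java.util.Random;
import java.util.function.DoubleUnaryOperator;

// Hypothetical sketch of random search: sample a setting, score it, keep the best.
final class RandomSearchDemo {

    record Result(double setting, double score) {}

    static Result search(DoubleUnaryOperator score, double min, double max, int tries, Random random) {
        if (tries <= 0) {
            throw new IllegalArgumentException("tries must be positive");
        }
        Result best = null;
        for (int i = 0; i < tries; i++) {
            // Draw a candidate uniformly from [min, max).
            double candidate = min + (max - min) * random.nextDouble();
            double s = score.applyAsDouble(candidate);
            // Keep the first candidate with the strictly highest score.
            if (best == null || s > best.score()) {
                best = new Result(candidate, s);
            }
        }
        return best;
    }
}
```

In the real setting the score would be the prediction precision, so each try costs a full learn-and-predict run; that cost is the main reason to cap the number of tries.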